CECAM Workshop – Modeling & Simulation of Fluid-Structure Interactions Across Scales

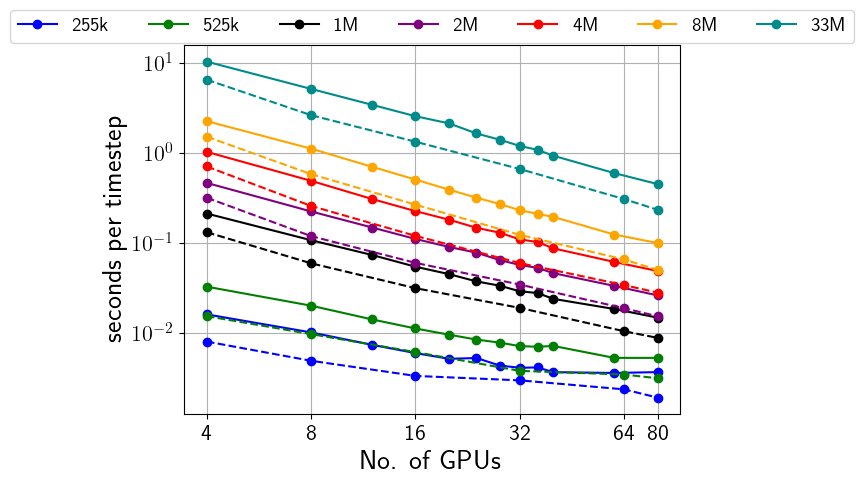

We are pleased to invite you to the workshop Modeling & Simulation of Fluid-Structure Interactions Across Scales, taking place on April 8–11, 2025, at the National Institute of Chemistry, Ljubljana, Slovenia.

This workshop will bring together experts and researchers to discuss recent advances in hydrodynamics modeling, multiscale coupling methods, AI-driven approaches, large-scale simulations, and HPC technologies. The program will feature lectures, discussions, and hands-on sessions.

The workshop is free of charge. To register and for more details, please visit https://www.cecam.org/workshop-details/modeling-simulation-of-fluid-structure-interactions-across-scales-1435. Abstract submission for poster presentations is open until March 14, 2025.

We look forward to your participation!